The Gap Between Human and Software Interfaces

Technological advances have made computing devices more powerful and more portable. These devices are meant to assist their users in every way the technology allows. However, the limited communication between humans and machines has widened the gap between what the technology can do and the efficiency users actually obtain from it. This issue concerns computer scientists and researchers in the field of Human-Computer Interaction (HCI), who are increasingly focused on closing it. Few would disagree that machines, not humans, should do most of the hard work.

The main aim of HCI, as the name suggests, is to investigate efficient techniques for improving interaction between humans and machines [1] [2]. This interaction still needs more research because machines are not as intelligent as humans. However, machines can be made intelligent for specific tasks, or parts of tasks, by giving them knowledge and reasoning capabilities through Artificial Intelligence (AI).

Minimizing the Expectation Gap

One of the main aims of HCI is to improve the usability of software. If a user can use software efficiently, the efficiency of the overall system improves directly. To design effective interfaces, a designer must understand the behavior of the user. This is not a simple problem, because different users behave differently while using the same software. It can only be solved if the software first observes the user’s behavior and then dynamically adapts to present a suitable interface. Interfaces that change automatically according to users’ behavior are termed adaptive interfaces.

To illustrate the idea, consider an employee who, on weekdays, uses his mobile phone to call his wife between 5:10 PM and 5:20 PM because they go home together. The mobile phone can learn this pattern, and from Monday to Friday, between 5:10 PM and 5:20 PM, when the user unlocks the screen and selects the call option, it should suggest calling his wife. This way, the user only needs to press the OK button. This article is not specific to a single platform or application; the proposed idea can be implemented on any software system that has user interfaces.

How to Minimize the Gap

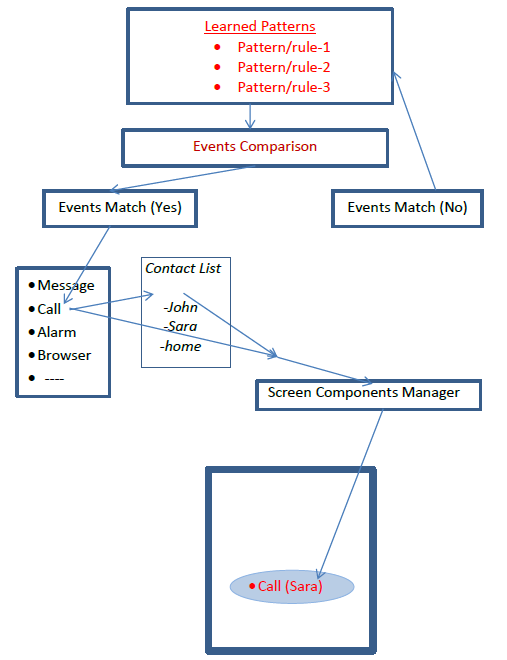

For this purpose, a modular approach can be adopted to design an interface in which the main interface components, such as buttons, menus, and lists, can be called onto a screen independently, as suggested by the output of the learned patterns. These patterns can be classified using platform-compatible tools. Scikit-learn [3], which is Python-based, supports both supervised and unsupervised algorithms. With a supervised learning approach, the algorithm can also be trained for feature selection [4]. The methodology for the proposed idea can be organized into the following logical modules. These modules are logical only, since platforms such as Android, Windows, and iOS use different implementation mechanisms.
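As a minimal sketch of how such patterns might be classified with scikit-learn, assume that usage events are logged as (weekday, minute of day) pairs labelled with the action the user took. The feature encoding, the toy log, the label names, and the helper `suggest_action` are all illustrative assumptions; the choice of a decision tree is likewise only one of many supervised options.

```python
from sklearn.tree import DecisionTreeClassifier

# Hypothetical usage log: [weekday (0=Mon .. 6=Sun), minute of day].
# 5:10 PM to 5:20 PM corresponds to minutes 1030 to 1040.
X = [
    [0, 1032], [1, 1035], [2, 1031], [3, 1038], [4, 1034],  # weekday calls
    [0, 540], [1, 600], [2, 780], [5, 1033], [6, 1036],     # other usage
]
# Hypothetical labels: the action the user took in each logged context.
y = [
    "call_wife", "call_wife", "call_wife", "call_wife", "call_wife",
    "none", "none", "none", "none", "none",
]

# Supervised learning: fit a small decision tree on the logged events.
model = DecisionTreeClassifier(random_state=0)
model.fit(X, y)


def suggest_action(weekday: int, minute_of_day: int) -> str:
    """Return the action label predicted for the given context."""
    return str(model.predict([[weekday, minute_of_day]])[0])
```

Calling `suggest_action(2, 1035)` asks the model what to offer on a Wednesday at 5:15 PM. A real deployment would need far more data and a validation step before any suggestion is shown to the user.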

- Learned Pattern

- Event Matcher

- Component Manager

Once the main patterns are observed, learned, and stored, the pattern data can be reviewed before it is put to use. The first module, Learned Pattern, not only holds the learned patterns but is also updated when a significant change in existing patterns is observed (depending on the algorithm in use). When a learned pattern is selected for implementation, the corresponding component is selected from the component list along with its arguments.
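One possible shape for the first module is a simple frequency store, sketched below under stated assumptions: contexts are (weekday, time slot) pairs, a pattern counts as learned once an action has been seen `min_count` times, and the most frequent action wins. The class name `LearnedPatternStore`, the action strings, and both thresholds are hypothetical and stand in for whatever learning algorithm is actually used.

```python
from collections import Counter
from typing import Dict, Optional, Tuple

Context = Tuple[int, int]  # (weekday, time-slot index)


class LearnedPatternStore:
    """Counts how often each action occurs in a (weekday, time-slot) context."""

    def __init__(self, slot_minutes: int = 10, min_count: int = 3) -> None:
        self.slot_minutes = slot_minutes  # assumed width of a time slot
        self.min_count = min_count        # assumed threshold for "learned"
        self.counts: Dict[Context, Counter] = {}

    def _context(self, weekday: int, minute_of_day: int) -> Context:
        return (weekday, minute_of_day // self.slot_minutes)

    def observe(self, weekday: int, minute_of_day: int, action: str) -> None:
        """Record one observed user action in its context."""
        ctx = self._context(weekday, minute_of_day)
        self.counts.setdefault(ctx, Counter())[action] += 1

    def learned_action(self, weekday: int, minute_of_day: int) -> Optional[str]:
        """Return the dominant action for this context, if seen often enough."""
        counter = self.counts.get(self._context(weekday, minute_of_day))
        if not counter:
            return None
        action, count = counter.most_common(1)[0]
        return action if count >= self.min_count else None
```

Because the store keeps counting, a stored pattern is replaced once a different action becomes more frequent in the same context, which is one simple way to realize the update behavior described above.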

For example, if a pattern is associated with calling, the Call component is selected. The corresponding argument is then attached, much like a function call with an argument. The second module, Event Matcher, handles the selection of the component and its argument. In this case, the argument for the call group will be a contact number from the contact list. A contact number will also be the argument of the message group, and so on. The combination of a component and its argument forms a call to the next phase, in which the Component Manager module manages the screen area. The third module receives the component ID and its associated argument and decides how to offer the option on the screen.
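The pairing of a component with its argument can be sketched as follows. This is an illustration only: the pattern string format `"group:contact_name"`, the names `ComponentCall`, `COMPONENTS`, `CONTACTS`, and `match_event`, and the sample contact number are all assumptions made for the example.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass(frozen=True)
class ComponentCall:
    component_id: str  # e.g. "call" or "message"
    argument: str      # e.g. a contact number from the contact list


# Hypothetical set of component groups the interface can offer.
COMPONENTS = {"call", "message"}

# Hypothetical contact list: name -> contact number.
CONTACTS = {"wife": "555-0100"}


def match_event(pattern: str, contacts: Dict[str, str]) -> Optional[ComponentCall]:
    """Turn a learned pattern such as "call:wife" into a component and argument.

    Returns None when the group is not a known component or the contact
    is missing, so nothing is passed on to the next phase.
    """
    group, _, name = pattern.partition(":")
    if group not in COMPONENTS or name not in contacts:
        return None
    return ComponentCall(component_id=group, argument=contacts[name])
```

The returned `ComponentCall` plays the role of the "function call with an argument" that is handed to the third module.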

The algorithm should take three factors into account when making such decisions.

- Appropriate sequence: It should check the current status of the screen. If the screen is in use and active for another application, the offer (a message, button, or any other option) should appear in an alert style. If the screen is not in use, whether locked or unlocked, the alert should be accompanied by ready options.

- Alert contents: The alert message should contain only the corresponding options.

- Placement: If the screen is already in use, the alert message should appear on the top layer with a Cancel option.
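The three factors above can be read as a small decision function for the third module. The sketch below is one interpretation, not a definitive design: the `ScreenState` values, the `Offer` fields, the style names, and `decide_offer` are hypothetical, and real platforms expose screen state and alerts through their own APIs.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List


class ScreenState(Enum):
    IN_USE = "in_use"            # another application is active
    IDLE_LOCKED = "idle_locked"
    IDLE_UNLOCKED = "idle_unlocked"


@dataclass
class Offer:
    style: str           # "alert" or "ready_options" (assumed style names)
    options: List[str]   # only the options relevant to this suggestion
    top_layer: bool      # whether to draw above the active application


def decide_offer(component_id: str, argument: str, screen: ScreenState) -> Offer:
    """Decide how a suggested component should be offered on the screen."""
    action = f"{component_id} {argument}"
    if screen is ScreenState.IN_USE:
        # Sequence and placement: alert on the top layer, with a Cancel option.
        return Offer(style="alert", options=[action, "Cancel"], top_layer=True)
    # Screen not in use (locked or unlocked): present the ready option directly.
    return Offer(style="ready_options", options=[action], top_layer=False)
```

Keeping the decision in a pure function like this makes it easy to test separately from any platform-specific rendering code.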

Future Guidelines for Interface Designers

The idea proposed in this article can be used to bridge the gap between user and machine, which is a long-standing issue. It can play a vital role in the emerging scenario in which wearable devices take the place of existing computing gadgets and even hand-held devices. The prominent advantage of wearable devices is that they meet users’ desire for minimal interaction when performing a specific task. A pedometer application can be used on a smartphone, yet users still prefer wearable devices on their arms so that they do not have to worry about turning on different options on the phone.

Various tools, algorithms, and techniques are available for implementing the proposed idea. It is only a matter of integrating them to achieve the desired goal for a specific device and software. It is nearly impossible to use a single generic application, technique, algorithm, or combination to achieve higher usability, and hence higher overall efficiency, for every system. The main reason is that humans do not accept change easily, so it is the software that must adapt itself to the user’s behavior.

Key Takeaways

- The interface gap between users and machines directly degrades the overall efficiency of the software.

- To minimize this gap, the software should automatically adapt its interfaces to the users’ behavior.

- Machine learning techniques can be used to learn and update the behavior patterns of users.

Recommended Reading

- Aykin, N. (Ed.). (2016). Usability and internationalization of information technology. CRC Press.

- Lee, D., Moon, J., Kim, Y. J., & Mun, Y. Y. (2015). Antecedents and consequences of mobile phone usability: Linking simplicity and interactivity to satisfaction, trust, and brand loyalty. Information & Management, 52(3), 295-304.

- Pedregosa, F., Varoquaux, G., Gramfort, A., Michel, V., Thirion, B., Grisel, O., … & Vanderplas, J. (2011). Scikit-learn: Machine learning in Python. Journal of Machine Learning Research, 12(Oct), 2825-2830.

- Le Thi, H. A., Le, H. M., & Dinh, T. P. (2015). Feature selection in machine learning: an exact penalty approach using a difference of convex function algorithm. Machine Learning, 101(1-3), 163-186.